RAG — Retrieval Augmented Generation, FT — Fine Tuning

Andi Sama — CIO, Sinergi Wahana Gemilang with Davin Ardian, Andrew Widjaja, Tommy Manik, Chintya Rifyaningrum, and Tjhia Kelvin; and Winton Huang — Client Engineering Leader, IBM Indonesia

The Foundation model, the large language model (LLM) trained on vast amounts of data (i.e., the majority of accessible data from the Internet), has been the core of Generative-AI nowadays since the introduction of chatGPT, a GPT-based model, in Nov 2022. Next, the data source from Internet-accessible data that the future LLMs will be trained on may come from the synthetic data (Ruibo Liu et al., 2024) that were generated by previous LLMs. Ironic, isn’t it?

LLM enables humans to communicate with AI through natural language, through text, as if we are communicating with another human being. By getting the text as the input, LLM may respond in the form of text or other inputs like code, images, audio, and video—hence the terms text-to-text generation, text-to-code generation, text-to-image generation, text-to-audio generation, and text-to-video generation.

There are application-based LLMs that can generate images and short animations from text (Leonardo.ai), generate video & voice just from text & image (Akool), as well as text to video (OpenAI Sora). Other emerging LLMs, not the extensive list, include Gemini (Google), Granite with WatsonX (IBM), Gauss (Samsung), Llama (Meta), GPT with chatGPT (OpenAI), DALL-E with chatGPT/Bing/Co-Pilot (OpenAI), and Mixtral (Groq).

Talk to an LLM

The illustration below shows how humans talk to an LLM. We feed the prompt and the query string to the LLM through an application (our App, the chatGPT application, utilizing GPT-4 LLM as the foundation model, for example). The application then vectorizes the prompt using the embedding APIs provided by the target LLM. This is the embedding, converting the text prompt to a format suitable for the input the target LLM expects.

To quote the definition of embedding (IBM, 2024): “Normally, words in the prompt are vectorized by the model. Vectorization converts text to tokens and then to numbers defined by the model’s tokenizer to identify the tokens. Lastly, the token IDs are encoded, meaning they are converted into a vector representation, which is the input format expected by the model’s embedding layer.”

Prompt Engineering and Fine Tuning

We can talk directly to an LLM by providing a prompt (zero-shot prompting), i.e., asking about something; the LLM will then provide the response. Or, we can structure the prompt by providing a few samples, for example, of how the LLM should respond (a few-shots prompting).

The art of structuring our prompt before sending it to LLM is called Prompt Engineering. This will not change the pre-trained parameters in the LLM.

Further, we can do Fine Tuning, i.e., feed LLM with new data, then retrain. This changes the trainable parameters in pre-trained in the LLM.

Zero-Shots Prompting: A sample of text-to-text generation using OpenAI chatGPT

(Generated on April 5, 2024, by the accessible version of chatGPT, accessed from the web browser on Windows)

Prompt

Please describe Generative AI as if I were five years old.

Please limit your response to a maximum of 300 words.Response (chatGPT)

Imagine you have a magic crayon that can draw all sorts of amazing pictures

without you even telling it exactly what to draw. Generative AI is like that

magic crayon, but for computers. Instead of drawing with colors on paper,

it uses numbers and patterns to create all kinds of things like pictures,

stories, or even music!

Just like how you learn to draw by looking at lots of pictures and practicing,

generative AI learns by looking at lots of examples and figuring out how to

make similar things on its own. It's like having a super smart friend who

learns from seeing lots of pictures, stories, or songs, and then can make

new ones that are similar but different.

So, when you see a cool drawing or read an interesting story that was made

by a computer, it's like that computer used its magic crayon to create

something new and exciting all by itself!Zero-Shots Prompting: A sample of text-to-text generation using OpenAI chatGPT

(Generated on April 6, 2024, by the accessible version of chatGPT, accessed from the web browser on Windows)

Prompt

Please describe Generative AI as if I were a graduate student.

Please limit your response to a maximum of 300 words.Response (chatGPT)

Generative AI is a branch of artificial intelligence focused on creating

new data based on patterns and examples from existing data.

It employs techniques like deep learning to generate content such as images,

text, or music that is original and often indistinguishable from

human-created content.

At its core, generative AI utilizes models, typically neural networks,

to learn the underlying structure of a dataset. These models then generate

new data by sampling from the learned distribution. For instance,

a generative model trained on a dataset of human faces can create entirely

new faces that are realistic and diverse, despite never having seen those

specific faces before.

One common approach in generative AI is the use of Generative Adversarial

Networks (GANs). In GANs, two neural networks, the generator and the

discriminator, engage in a kind of "cat-and-mouse" game. The generator

creates samples, while the discriminator tries to distinguish between real

and fake samples. Through this iterative process, both networks improve,

resulting in the generator producing increasingly realistic samples.

Another popular method is Variational Autoencoders (VAEs), which learn

a latent representation of the input data and then generate new samples

by sampling from this learned latent space.

Generative AI finds applications in various domains, including art generation,

text generation, drug discovery, and data augmentation. Its ability to create

new, realistic data has implications for content creation, data synthesis,

and even addressing data scarcity issues in certain fields. However, ethical

considerations regarding the potential misuse of generative AI, such as

generating deepfake videos or spreading disinformation, are significant

areas of concern that researchers and policymakers must address.A Few-Shots Prompting: A sample of text-to-text generation using OpenAI chatGPT

(Generated on April 7, 2024, by the accessible version of chatGPT, accessed from the web browser on Windows)

Prompt

Convert country to country's heritage.

Indonesia -> Batik

Italia -> Pisa Tower

France -> Eiffel Tower

Thailand ->Response (chatGPT)

Thailand -> Wat Pho

Thailand's heritage is exemplified by Wat Pho,

a historic temple complex in Bangkok renowned for its stunning architecture,

intricate decorations, and the famous Reclining Buddha statue.Zero-Shots Prompting: A sample of text-to-code generation using Microsoft Co-Pilot

(Generated on April 5, 2024, by Microsoft Co-Pilot, a preview version on Windows)

Prompt

Create a Python code to print integer numbers from 1 to one million.Response (Co-Pilot)

Certainly! Below is a Python code snippet that prints integer numbers

from 1 to one million:

Python

for num in range(1, 1000001):

print(num)

AI-generated code. Review and use carefully. More info on FAQ.

This code uses a for loop to iterate through the range of numbers

from 1 to 1,000,000 (inclusive) and prints each number.

You can adjust the range as needed for your specific use case.

Feel free to run this code in your Python environment to see the output. 😊Traditional AI and Generative AI

Traditional AI — Training, Transfer Learning, and Inferencing

In the traditional deep learning model with millions of parameters, there is a mechanism to use the pre-trained model as a base to train our modified model by retraining the pre-trained model with the new data, enabling the model to be updated with the new unseen data. This transfer learning is typically done by retraining a few last layers of the neural network with the new additional dataset. With a few high-end Graphics Processing Units (GPUs) to do the transfer learning, we can have the newly trained model in just hours or days.

However, the original model's training still requires many GPUs, tens to hundreds of high-end GPUs, with days or weeks of training times. This requires a high computing cost.

Running the model, or what we call inferencing, requires fewer GPUs. A few GPUs would be enough to perform inferencing for typical light applications by serving just a few concurrent requests at a reasonable computing cost.

Generative AI — Training the Foundation Model

In today's generative LLM-based AI with billions to trillions of parameters, it is not uncommon for the training dataset to be huge. The foundation model in generative AI is the LLM-based model that has been pre-trained with a vast dataset, such as the whole accessible content of the Internet. Training a foundation model requires a considerable computing cost.

Regarding computing requirements, training the foundation model requires tens to hundreds of thousands of high-end GPUs over several months, beyond the reach of even world-class corporations (or Governments). Only the big guys who can spend hundreds of millions to billions of USD (United States Dollars) in research and development (R&D) can afford such a massive infrastructure.

Generative AI — Leveraging the Foundation Model

Here come the new terms for utilizing the base model: the foundation model. We can use it as-if (directly) or modify the foundation model by retraining the pre-trained model with some new additional dataset to understand the local context more.

Generative AI — Inferencing the Foundation Model

Inferencing, the foundation model, would also be challenging, as the computing requirement to run such a vast foundation model with billions of parameters still needs at least a few high-end GPUs. If the capacity to serve the user request in parallel increases, the GPU requirement would be aligned with the requested capacity. Still, there is a considerable computing cost to do the inferencing.

Let's see the illustration of a user talking to an LLM through an application. LLM, the GPT-4 foundation model, runs in the cloud, serving multiple users. The front-end application is chatGPT, which allows the user to interact.

A typical flow would be as follows.

- (1) The user constructs a prompt (text) and submits a request to the LLM (GPT-4) through the application (chatGPT).

- (2) chatGPT does the embedding process by converting the prompt to a vector (vectorizing the prompt). chatGPT tokenizes the prompt (converts the prompt to tokens), converts the tokens to numbers, and finally encodes the vector in the format expected by the target LLM. Note that different LLMs have different ways of tokenizing, converting to numbers, and encoding the prompt.

- (3) A model serving service that runs the LLM receives the request and responds to the application.

- (4) Upon receiving the response to the request, the application passes the response to the user. In the case of chatGPT, for example, the response is displayed on the screen.

Hallucination in LLM

LLM trained on a non-curated dataset may create problems, such as hallucination when trying to answer a question in which LLM does not have the ground truth to the answer (the answer was not in the training dataset that LLM was trained on). A hallucination occurs when LLM tries to make up the answer and develop a fabricated and convincing answer that seems right.

Inserting External Information into the Foundation Model

One way to reduce hallucination is to provide local context for the answers that we seek for, i.e., inserting external information into the foundation model, the pre-trained LLM. Instead of directly passing the prompt to the LLM, we provide local context for possible answers by querying the local database first through the Retrieval Augmented Generation (RAG) method.

RAG does not modify the foundation model; it provides local context to the pre-trained LLM by inserting external, high-quality local data from the content store. This method allows the LLM to answer the query considering additional data provided within the enhanced query.

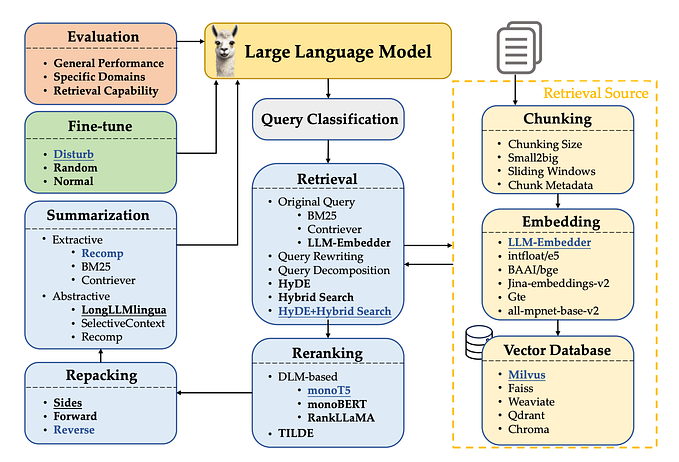

RAG — Retrieval Augmented Generation

First, the local context (content store) needs to be prepared. Our local context, which may be text, PDF, image, audio, video, etc., needs to be converted into text. Certain data preprocessing may also be necessary, e.g., removing duplicates.

Once the essential preparation for the local data is completed, it is vectorized (embedded process) through the LLM-provided API (Application Programming Interface) and stored in the content store (hosted in the vector database).

Considering the LLM's context window size, we may need to chunk the text before embedding it so that the request fits within the LLM's context window. For example, the LLM with a context window size of 16K tokens can handle 75% x 16K words, meaning 75% x 16,000 = 12,000 words.

Then, when the content store is ready, a typical flow would be as follows. Note that steps 1, 3, and 4 were the same. Steps 2a and 2b have been added, and step 2c (previously step 2) has been modified to include local context from the content store.

- (1) The user constructs a prompt (text) and submits a request to the LLM (GPT-4) through the application.

- (2a) The application queries the content store by passing the vectorized prompt as the query text.

- (2b) The content store returns the list of matching queries as vectorized responses. k is the number of returned items.

- (2c) The application combines the vectorized prompt with the vectorized responses from the content store and then sends this as a request to LLM with the local context.

- (3) A model serving service that runs the LLM receives the request and responds to the application.

- (4) Upon receiving the response to the request, the application passes the response to the user.

Inserting local context with RAG does not change the trained parameters of the pre-trained model.

Supervised Fine Tuning

To encode additional context to the pre-trained LLM, we can fine-tune and modify its trainable parameters by retraining the model with new samples from external high-quality local data, usually in the form of {prompt, response} pairs.

The retraining process in fine Tuning requires considerable GPU resources, even though it only leverages the foundation model, not doing the training from scratch.

Fine Tuning modifies the foundation model in supervised mode. Hence, the name Supervised Fine Tuning. By this method, the trainable parameters of LLM are permanently updated with new context from the additional samples, typically 10,000 to 100,000 samples (Chip Huyen, 2023).

Summary

Training the foundation model (the large language model, LLM) from scratch is beyond the capability of general enterprises unless they can provide the resources needed to train it (infrastructure, people, and data).

Providing the necessary infrastructure would be a huge challenge, as with the increasing parameters in the trained model, training that requires thousands or tenths of thousands of GPUs would be unreachable except for large enterprises that can spend billions of USD in research and development. However, there is a trend to reduce the number of parameters, keeping them in billions rather than going to trillions — such as Mixture of Experts, the Mixtral foundation model.

To conclude, with the Retrieval Augmented Generation (RAG) approach, we can leverage the trained model by inserting external information, the "local context." According to Chadha, Aman, and Jain, Vinija (2020), "In summary, RAG models are well-suited for applications where there's a lot of information available, but it's not neatly organized or labeled."

References

- Andi Sama, 2023, "Generative AI — The Exciting Technology Leapfrog in 2023 and Promising Years Ahead", https://andisama.medium.com/generative-ai-the-exciting-technology-leapfrog-in-2023-and-promising-years-ahead-fa9505099169.

- Andrew Widjaja, Andi Sama, 2023, "[Generative-AI] Experimenting with GPT-4 and Dall-E 3," https://andisama.medium.com/generative-ai-experimenting-with-gpt-4-and-dall-e-3-6beada36323e.

- Chadha, Aman and Jain, Vinija, 2020, "Retrieval Augmented Generation," https://aman.ai/primers/ai/RAG/

- Chip Huyen, 2023, "RLHF: Reinforcement Learning from Human Feedback," https://huyenchip.com/2023/05/02/rlhf.html.

- IBM, 2024, "Methods for tuning foundation models," https://www.ibm.com/docs/en/cloud-paks/cp-data/4.8.x?topic=studio-methods-tuning.

- OpenAI, 2024, "Creating video from text," https://openai.com/sora.

- Ruibo Liu, et al., 2024, “Best Practices and Lessons Learned on Synthetic Data for Language Models,” https://arxiv.org/abs/2404.07503.

- Winton, 2024, “Startup Framework for Implementing Generative AI in Enterprise,” https://www.linkedin.com/pulse/startup-framework-implementing-generative-ai-winton-winton-nqyyc.